Dr Mario Bojilov has worked in Business, Consulting, and Higher Education since 1994. He has taught 1,500+ university students and consulted with 650+ enterprise professionals in the areas of Finance, Digital Transformation, Digital Risk and Audit. He holds a Master of Engineering Science, a Graduate Diploma of Applied Finance and Investment, and a Doctor of Philosophy degree.

Dr Bojilov has co-authored the following articles: Improving Organizational Performance through Big Data, and Using AI to Automate Ethical Hacking. He also publishes the “Digital Transformation + Risk” newsletter.

Recently, in an exclusive interview with Digital First Magazine, Dr Bojilov shared his professional trajectory, what sets MBS Academy apart from its competitors, personal role models, hobbies and interests, future plans, words of wisdom, and much more. The following excerpts are taken from the interview.

Dr Bojilov, please share your background and areas of interest.

I am Dr Mario Bojilov, Founder and Chief Executive Officer of MBS Academy. My Bachelor’s Degree is in Electronics and Automation Engineering. After coming to Australia, I completed a Master of Engineering Science at the University of Queensland, focusing on Neural Networks. Furthermore, I recently received a Doctorate from Central Queensland University for research in using Artificial Intelligence (AI) to combat financial crime.

My background is in Audit and Risk in large enterprises and Government organisations. My areas of interest are using AI and Digital technology to reduce organisational risk and, more recently, working with Board Directors and Chief Audit Executives on adopting AI and on building boards and teams that have a profound impact on their organisations.

Can you brief us on the mission and vision of MBS Academy? What sets it apart from its competitors?

MBS Academy was founded in 2014 with the senior, non-technical professional in mind. The company’s mission is to support large enterprises and governments in improving collaboration, efficiency, and communication by giving business units the confidence to harness digital technology and AI.

What makes us different from our competitors is that we focus on facilitation, not training. In training, the instructor is the primary, active party, while the attendees are largely passive. In our programs, the attendees are the active party, and the instructor is there to guide them and share insight. To achieve that, we apply the 4MAT framework, which divides the learning process into four stages: Why, What, How, and What If.

As the Lead Author, you developed the course structure and corresponding materials for the Shaping Success with AI course. What are its key benefits, who can apply, and how does it help organizations?

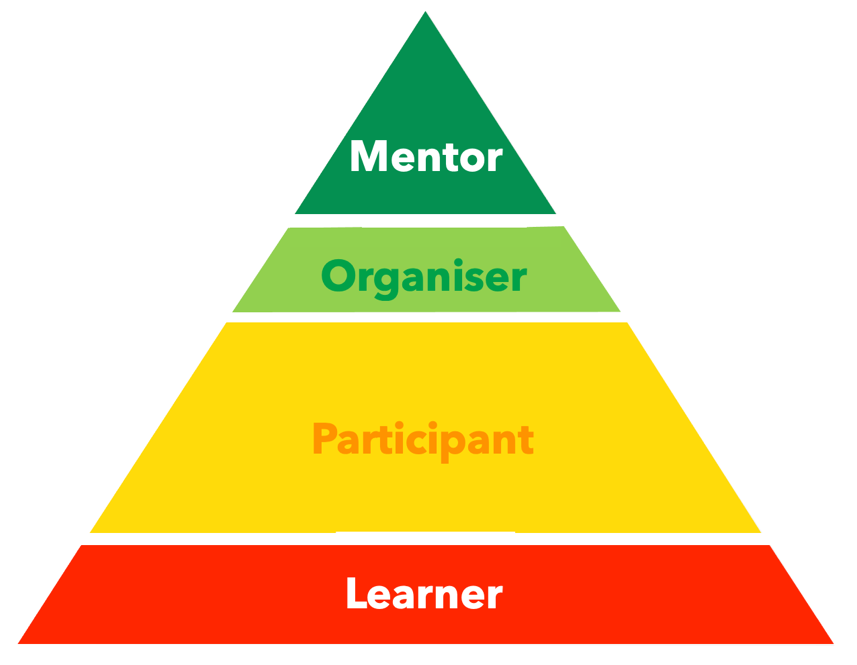

One of our flagship products is “Shaping Success with AI – A Framework for Visionary Boards”. As its name implies, we designed it explicitly for Board Directors. Its goal is to move the participants to the top of the pyramid shown below.

The framework provides three key outcomes, listed below, that will bring Directors to the top of the pyramid.

Training in the framework will give Board Directors the expertise, the vision, and, most importantly, the confidence to guide their organisations through constantly changing and exciting times.

What is responsible AI, what is AI governance, and how do the two differ?

Responsible AI refers to the ethical development and deployment of artificial intelligence systems, emphasising fairness, transparency, accountability, and harm prevention. It involves designing, training, and operating AI in ways that align with moral values and respect the rights and autonomy of individuals.

AI governance, on the other hand, is the broader framework of legal and organisational policies and procedures that ensure AI is developed and used in compliance with regulations and ethical standards. It includes the oversight mechanisms that control AI's impact on society, manage its risks, and ensure that AI systems function as intended.

Which industry sectors have seen the most development in AI-based products and services in your jurisdiction?

Consumers and organisations are constantly bombarded with new AI-based products. My personal interests lie in the applications of AI within the enterprise. In that regard, the sectors where AI is making the most significant inroads are financial services, and tourism and travel. For example, the Flight Centre Group, one of Australia's largest leisure and corporate travel businesses, has recently created the position of Head of AI and appointed Mr Adrian Lopez to the role.

Which technology are you investing in now to prepare for the future?

At present, we are focusing heavily on Generative AI. At MBS Academy, we believe that it will provide a significant competitive advantage to companies that use it strategically.

Who has influenced you the most in life and why?

The biggest influences in my life are Steve Jobs, Winston Churchill, and Benjamin Franklin.

What are your passions outside of work?

Outside work, I enjoy water sports (particularly windsurfing), reading philosophy, and learning new languages.

Tell us about your future plans. Where do you see yourself in the next five years?

In the next five years, I will focus on growing MBS Academy and making it a well-known provider of AI-related consulting and mentoring. I don't see myself stopping anytime soon.

What best practices would you recommend to assess and manage risks arising in the deployment of AI-related technologies, including those developed by third parties?

While AI brings numerous benefits, its deployment in organisations also creates significant risks. This area is vast and continually evolving as governments, private organisations, and malicious actors contend with one another. For organisations and individuals looking for a starting point in this area, I would recommend familiarising themselves with NIST's "AI Risk Management Framework" and the draft of the "EU AI Act". Both cover internal as well as third-party risks very well.