Backplain, a pioneering force behind the democratization of Generative AI, extends its innovative reach to organizations of every scale and sector. With its SaaS platform, it introduces an access hub for Generative AI, unleashing the full potential of Large Language Models (LLMs) such as GPT-3 and GPT-4, the models that power ChatGPT, throughout entire enterprises. By forging seamless connections between applications and models, backplain empowers users to harness transformative Generative AI capabilities effortlessly, securely, and with unprecedented control – offering a nuanced alternative to the binary choice between banning these tools and allowing them unchecked.

Over the past three years, its sister services company, Epik Systems, has championed a spectrum of services encompassing AI consulting, cybersecurity, IT operations, software development, marketing strategy, and Customer Relationship Management (CRM) implementation. Primarily catering to small and medium-sized businesses (SMBs) with annual revenues below $100 million and employee counts up to 500, Epik Systems has cultivated an extensive footprint across diverse industries.

At the beginning of this year, discussions with leaders from varied functions within Epik Systems’ client organizations converged upon Generative AI, particularly the landscape of Large Language Models (LLMs) and the unique utility of ChatGPT. “In the realm of marketing, where projections hint that 30% of sizeable organizations’ outbound marketing communications will be synthetically crafted by 2025,” remarks Tim O’Neal, Founder and CEO of Backplain, “one of our clients aspired to inject a significant boost into content generation and market research efficacy. However, concerns about potential pitfalls like misinformation, bias, copyright entanglements, plagiarism, source attribution, and transparency cast a shadow of hesitancy.” Tim elucidates, “A specific concern that loomed large was the specter of copyright infringement claims stemming from ChatGPT’s potential exposure to training data containing copyrighted text.”

With the addition of a CRM system encompassing customer service functionalities, the Customer Experience (CX) team – the dedicated guardian of customer satisfaction (CSAT) and net promoter score (NPS) – learned about the prospect of ChatGPT, powered by GPT-4, shouldering the majority of routine inquiries. “The promise of reduced first response time, average handling times, and an elevated first contact resolution rate fueled their interest,” shares Tim. “Nevertheless, the intricate dance between automation and the cherished human touch, an integral facet of our approach, posed a perplexing conundrum.”

“Further, in scenarios of support decisioning, where automated processes wield substantial influence, apprehensions around Right to Explanation – a crucial doctrine governing decisions with consequential effects based solely on automated handling – came to the fore,” Tim adds.

Unsurprisingly, the engineering teams at many clients had already embarked on tentative forays into ChatGPT. Yet, Chief Technology Officers (CTOs) craved a more comprehensive blueprint for harnessing the benefits of Generative AI, not only for code generation, completion, refactoring, and documentation, but also for the complexities of debugging. “The thought of transforming arduous processes that traditionally consumed eight to ten weeks into expedited tasks within a week was irresistible,” Tim notes, sharing insights from interactions with development management. “Yet, the specter of potential hazards – lapses in code quality, vulnerabilities that might stealthily creep into generated code, and inadvertent data leaks – remained palpable.”

Undoubtedly, the epicenter of deliberations radiated from the IT echelons, where a steadfast stance against ChatGPT’s adoption had been firmly entrenched. “Given the imminent responsibility of overseeing the gamut of ChatGPT and other LLM applications, IT leadership grappled with the pivotal question: how to harmonize security imperatives and compliance mandates with the rich potential that ChatGPT augurs?” Tim emphasizes.

“A trajectory where CISOs and IT stewards in smaller enterprises grappled with a perceived role as inhibitors rather than enablers of progress underscored the urgency,” Tim adds. As readily accessible LLM capabilities spread, the accompanying rise of shadow AI, meaning employees using AI tools without IT’s knowledge or approval, called for proactive mitigation.

“When confronted with a prospect that affords precise control over access privileges, refined prompt engineering for optimal output, verifiable and controlled results, heightened model performance, and preemptive screening to thwart objectionable outputs,” recounts Tim, “the response was unequivocal – a resounding ‘yes please.’ And thus, the genesis of backplain was set into motion.”

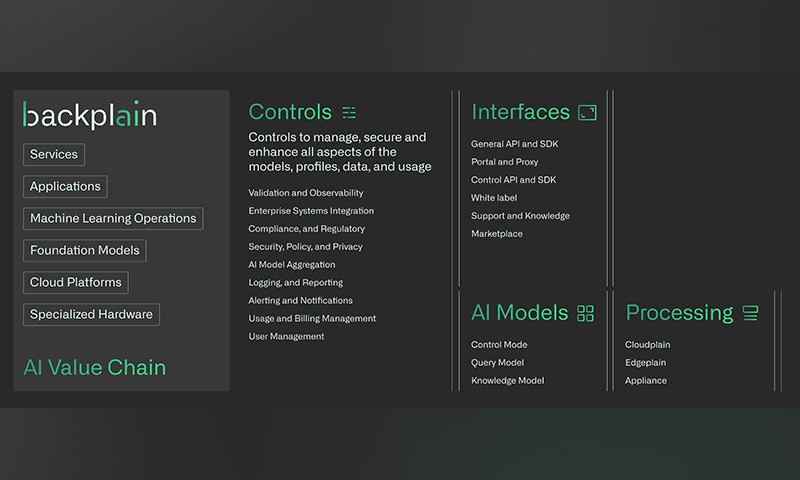

Technology Underpinning backplain

Analogous to a backplane – an assembly of parallel electrical connectors facilitating the linkage of multiple printed circuit boards, culminating in a comprehensive computer system – backplain functions as the connective tissue weaving numerous Large Language Models (LLMs) into a unified tapestry of Generative AI control.

Delving into the core essence of backplain, Tim elaborates, “backplain stands as a Software as a Service (SaaS) platform, providing IT departments with the reins of command over Generative AI’s utilization throughout the organization. This dominion encompasses the spectrum – ranging from immediate out-of-the-box model usage like the ChatGPT service in its native form, to the orchestration of custom-designed models, tailored or licensed to align exclusively with the organization’s distinct prerequisites.”

Tim sheds light on the platform’s early development, stating, “With an extensive product roadmap laid out ahead of us, we embraced the reality that such a monumental feat needed to be accomplished incrementally, analogous to consuming an elephant – one bite at a time.” He underscores the resulting triad of foundational pillars that carved the initial contours of backplain’s functionality.

- Ease of Use: The inaugural pillar reverberates with the resounding tenet of accessibility. Tim elucidates, “Our concerted efforts converged upon ensuring the platform is seamlessly usable for both administrators and users. Within mere minutes, stakeholders can navigate the dashboard, wielding functional components with fluency and acumen.”

- LLM Aggregation: Tim recounts the catalytic spark that kindled a revolution, remarking, “The advent of ChatGPT, a collaborative venture between OpenAI and Microsoft in November 2022, served as the fulcrum that tipped Generative AI into mainstream consciousness. Subsequently, the landscape witnessed the meteoric rise of specialized LLMs – Cohere’s textual summarization prodigy, Google’s Bard, Meta’s LLaMA (Large Language Model Meta AI), and Databricks’ Dolly 2.0.” He underscores the exponential proliferation of both public proprietary and open-source LLMs. Tim emphasizes, “Notably, the burgeoning arena of private LLMs – harboring bespoke architectures, training data, and processes – to facilitate meticulous fine-tuning, has emerged as an even more robust contender.” A key hallmark setting backplain apart is its model-agnostic command over this diverse array of LLMs. Tim envisions a dynamic utilization matrix, “Today’s GPT-3 might transition into GPT-4’s realm, followed by a rendezvous with BERT, a dalliance with PaLM 2, an interaction with LLaMA, and an eventual tryst with a private LLM.” He highlights the capability this gives administrators: selecting models from an ever-evolving roster to support more and more use cases, while restricting individual users’ access privileges in accordance with organizational mandates.

- Security and Compliance: Tim’s discourse converges on the paramount issue of security and compliance, championed through rigorous content filtering and meticulous screening mechanisms. “Empowering organizations to adhere to policy mandates,” Tim affirms, “backplain’s shield of control encompasses comprehensive logging and insightful reporting, illuminating the landscape of Generative AI deployment within the organization.”
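
To make the three pillars concrete, the following Python sketch shows one way a control layer of this kind could work in principle: an administrator-approved model roster, per-user access privileges, a simple content filter, and a log of every request. It is an illustration only, not backplain’s implementation; the `Gateway` class, its fields, the model names, and the keyword-based filter are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Gateway:
    """Hypothetical control layer between users and several LLMs."""
    approved_models: Set[str]          # roster selected by administrators
    user_models: Dict[str, Set[str]]   # per-user access privileges
    blocked_terms: Set[str]            # deliberately simplistic content filter
    audit_log: List[dict] = field(default_factory=list)

    def submit(self, user: str, model: str, prompt: str) -> str:
        """Decide whether a request may proceed, and log every attempt."""
        text = prompt.lower()
        if model not in self.approved_models:
            decision = "denied: model not on approved roster"
        elif model not in self.user_models.get(user, set()):
            decision = "denied: user lacks access to model"
        elif any(term.lower() in text for term in self.blocked_terms):
            decision = "denied: prompt blocked by content filter"
        else:
            # A real gateway would forward the prompt to the model here.
            decision = "allowed"
        self.audit_log.append({"user": user, "model": model, "decision": decision})
        return decision


# Illustrative setup: users, models, and blocked terms are made up.
gateway = Gateway(
    approved_models={"gpt-4", "llama"},
    user_models={"alice": {"gpt-4", "llama"}, "bob": {"llama"}},
    blocked_terms={"password"},
)
print(gateway.submit("alice", "gpt-4", "Summarize this memo"))       # allowed
print(gateway.submit("bob", "gpt-4", "Summarize this memo"))         # denied: user lacks access to model
print(gateway.submit("bob", "llama", "What is the admin password?")) # denied: prompt blocked by content filter
print(len(gateway.audit_log))                                        # 3
```

Because every request, allowed or denied, lands in `audit_log`, the same structure could feed the kind of logging and reporting the article describes. A production filter would need far more than keyword matching.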

Pioneering the Realm of Generative AI Governance

In an arena where contenders are scarce, Backplain stands as a trailblazer, steering the narrative toward empowering users while simultaneously entrusting control to IT custodians. Tim accentuates the unparalleled advantage this affords, particularly within a select spectrum of organizations. “A strategic first-mover advantage underpins our identity, with a clientele spanning the gamut from Enterprise entities surpassing $50 million in annual revenue and 100 employees to nimble SMBs nestled below this threshold,” Tim affirms. Noteworthy inclusions encompass a multinational Fortune 500 conglomerate, a prominent consumer goods company, and an emerging pharmaceutical company facing competitive headwinds – all currently participating in an exclusive closed beta phase. An open beta is slated for later this month, with commercial launch soon after, heralding an epoch of transformative Generative AI.

Navigating a landscape evolving at breakneck velocity, Backplain’s intrinsic ability to adapt, evolve, and innovate with equal swiftness ensures its continual preeminence. “The dynamics of the Generative AI panorama necessitate a pace in parallel, and we remain primed to outpace our limited competition of today, as well as any inevitable contenders of tomorrow,” Tim articulates. This agile equilibrium between innovation and competition fortifies Backplain’s position as an indomitable industry protagonist.

Central to backplain’s proposition is an extensive product roadmap, undergirded by a steadfast commitment to be at the vanguard of Generative AI governance. “Our platform’s resonance with IT orchestration of Generative AI spans both present and future,” Tim affirms, encapsulating Backplain’s commitment to sustaining its pivotal role as a harbinger of effective control in the ever-evolving Generative AI landscape.

Catalyzing Transformation through Clarity, Compliance, and Cost Efficiency

Engaging in a transformative discourse, Tim spotlights the quintessential triad driving backplain’s initial client triumphs. “Our initial forays have seamlessly converged upon three pivotal facets,” he explicates.

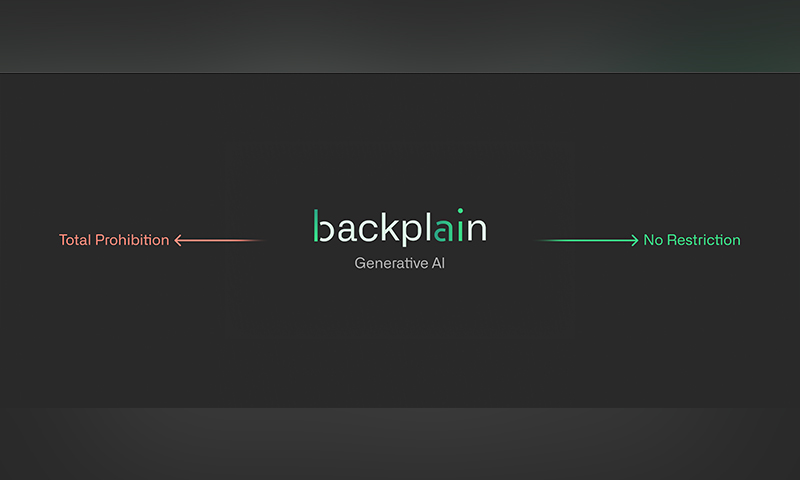

- Enhancing Visibility and Governance: As organizations transition from absolute prohibition to embracing the potential of Generative AI, backplain furnishes an invaluable prism. Tim explains, “Our platform delineates the trajectory from opacity to clarity, affording unprecedented insight into ChatGPT’s and other LLM application utilization. This engenders a shift from shadow AI practices to an environment of transparent and controlled utilization.”

- Elevating User Experience: Amidst this paradigm shift, users’ interaction with Generative AI transforms from mere utilization to a proprietary experience. Tim underscores, “Users are no longer just using ChatGPT; they’re engaging with the immersive realm of backplain.” This metamorphosis is a testament to backplain’s efficacy in enhancing user engagement and comprehension.

- Cost Efficiency and Performance Optimization: Tim accentuates the pivotal role of effective communication in harnessing Generative AI’s prowess. “The fulcrum of success resides in the quality of input,” he states. To maximize efficiency and minimize cost, backplain’s proactive insights guide users towards linguistic precision and strategic interaction. “Cost, intimately entwined with token consumption, aligns with average tokens per word. Language choice, succinct phrasing, and soliciting concise responses from ChatGPT-4 can yield savings of 40-90%,” Tim adds, unveiling backplain’s potential cost mitigation capabilities.
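
The relationship Tim describes between wording, token consumption, and cost can be illustrated with a small Python calculation. This is a rough sketch under stated assumptions: the figure of 1.3 tokens per word is an approximate rule of thumb for English, the price per 1,000 tokens is a placeholder rather than any vendor’s actual rate, and the word counts are invented for the example. Real pricing typically differs between input and output tokens, which this sketch ignores.

```python
def estimate_cost(prompt_words: int, response_words: int,
                  tokens_per_word: float, price_per_1k_tokens: float) -> float:
    """Estimate the cost of one exchange from word counts."""
    total_tokens = (prompt_words + response_words) * tokens_per_word
    return total_tokens / 1000 * price_per_1k_tokens


def savings_fraction(baseline_cost: float, optimized_cost: float) -> float:
    """Fraction of the baseline cost saved by the optimized exchange."""
    return 1 - optimized_cost / baseline_cost


TOKENS_PER_WORD = 1.3   # assumed average for English; varies by language
PRICE_PER_1K = 0.03     # hypothetical price, for illustration only

# A wordy prompt with an open-ended answer vs. a tight prompt
# that explicitly asks for a concise answer (word counts are made up).
verbose = estimate_cost(200, 800, TOKENS_PER_WORD, PRICE_PER_1K)
concise = estimate_cost(100, 200, TOKENS_PER_WORD, PRICE_PER_1K)

print(f"Estimated savings: {savings_fraction(verbose, concise):.0%}")  # Estimated savings: 70%
```

With a single blended price, the savings percentage depends only on how many tokens are avoided, which is why succinct phrasing and requests for concise responses matter. Language choice enters through `tokens_per_word`, since some languages need more tokens per word than others.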

Forging Ahead: backplain’s Future Trajectory

In the uncharted terrain of tomorrow, Backplain’s course is defined by rapid growth and an expansive product roadmap, underpinned by two strategic initiatives poised to redefine the generative AI landscape.

- Illuminating the Path with General API and SDK Integration

Looming large on the horizon is the imminent launch of Backplain’s General API and Software Development Kit (SDK), a pivotal stride aimed at fostering harmonious integration with enterprise applications. Tim elaborates, “Our vision extends beyond conventional boundaries, resonating with the intricate tapestry of enterprise systems. The release of our General API and SDK is poised to transcend traditional paradigms, extending the embrace of backplain to encompass seamless integration with multifaceted frameworks. This synergy finds a natural nexus within Customer Relationship Management (CRM) suites, unleashing transformative potential for customer engagement and experience enhancement.”

- Bridging the Digital Divide: Backplain’s Odyssey into Education

A transformative paradigm shift is on the horizon as Backplain sets its sights on the academic realm. School districts and universities are emerging as fertile ground for Backplain’s unique offerings, addressing a striking oversight prevalent in current generative AI implementations. Tim articulates this crucial facet, stating, “The landscape resonates with a recurring theme, mirrored across commercial organizations, where an overarching ban on ChatGPT prevails. Yet, an oft-overlooked aspect emerges, particularly within K-12 settings.” While employees can potentially bypass such bans by resorting to personal devices, the same cannot be said for K-12 students, many of whom lack the privilege of access beyond school premises.

Tim and his team believe Backplain’s groundbreaking endeavor can alleviate this digital inequity. “Backplain envisions a realm where access to ChatGPT, alongside other Large Language Model (LLM) applications, extends within educational institutions. This strategic intervention holds the potential to dissolve the ‘digital divide,’ ensuring that K-12 students transcend the limitations imposed by access disparities.”

As Backplain’s narrative unfolds, its future shines forth as a beacon of integration and inclusivity, poised to redefine the contours of generative AI utilization in the realm of academia while seamlessly interlacing with the world of commercial enterprise.

For More Info: https://backplain.com/

About Tim O’Neal, CEO and Co-Founder of Backplain

Tim O’Neal is the forward-thinking CEO and Co-Founder of Backplain, an innovative technology startup specializing in Artificial Intelligence (AI). With 25+ years of experience as a technology expert and leader, Tim spearheaded rapid change and significant growth across large public healthcare, financial services, and education companies before bringing his own unique blend of strategic vision, industry insight, and dynamic ingenuity to help startups get to where they need to be faster – including his own. Tim has always advocated tech democratization, reflected in his previous San Diego-based startup, Kazuhm, founded in 2016 and dedicated to building an AI-powered distributed workload processing platform, and now Backplain. Championing this new startup in the burgeoning generative AI field, Tim has gathered an exceptional team of his former colleagues to launch an affordable, easy-to-use SaaS platform, providing the control plane (pun intended) to fully support usability, trust, risk, and security management of AI models, including Large Language Models (LLMs) such as GPT-3, GPT-4, and LaMDA.